Three dimensional representations are becoming ever more important in visual communication. In today’s post, we examine some of the methods that are used to capture and represent 3-d images.

There are many different ways to record and use depth in visual imagery. There are several depth-enabled formats that are simply extensions of single still images, and we’ll take a quick look at those here. Each of these makes use of multiple images that are combined to create a three-dimensional rendering. And each of these is gaining acceptance as a technique that reaches beyond a particular piece of software or manufacturer. Note that it’s also possible for each of these techniques to be used for motion images as well.

Stereoscopic pairs

Dimensional imaging has long been possible through the use of stereoscopic pairs, one for each eye. These images produce the illusion of depth when properly viewed, such as on a stereo TV, headset, GAF ViewMaster or other binocular viewing device. The Realist format slide in the banner of this post is an example of a popular mid-century stereo format.

Mercator projections and spherical images

Depth information can also be captured and represented as a spherical panorama. This was a common use of Quicktime VR in the early days of digital imaging. Spherical imaging is now possible with smartphones and dedicated cameras like the Ricoh Theta. There are two primary ways that these images are saved. A Mercator projection “unwraps” a sphere onto a rectangle. When viewed with the proper software, the image is wrapped back into a spherical model.

Spherical images may also be represented as twin 180+ degree fisheye images. Again, these can be wrapped onto a sphere in software for a more compelling viewing experience.

A Mercator projection, created as a still by the Rich Theta, shows how a spherical image can be unwrapped to fit into a rectangular frame.

This is the way the Ricoh Theta saves a video as a pair of “bubbles” They are seamed back together in playback.

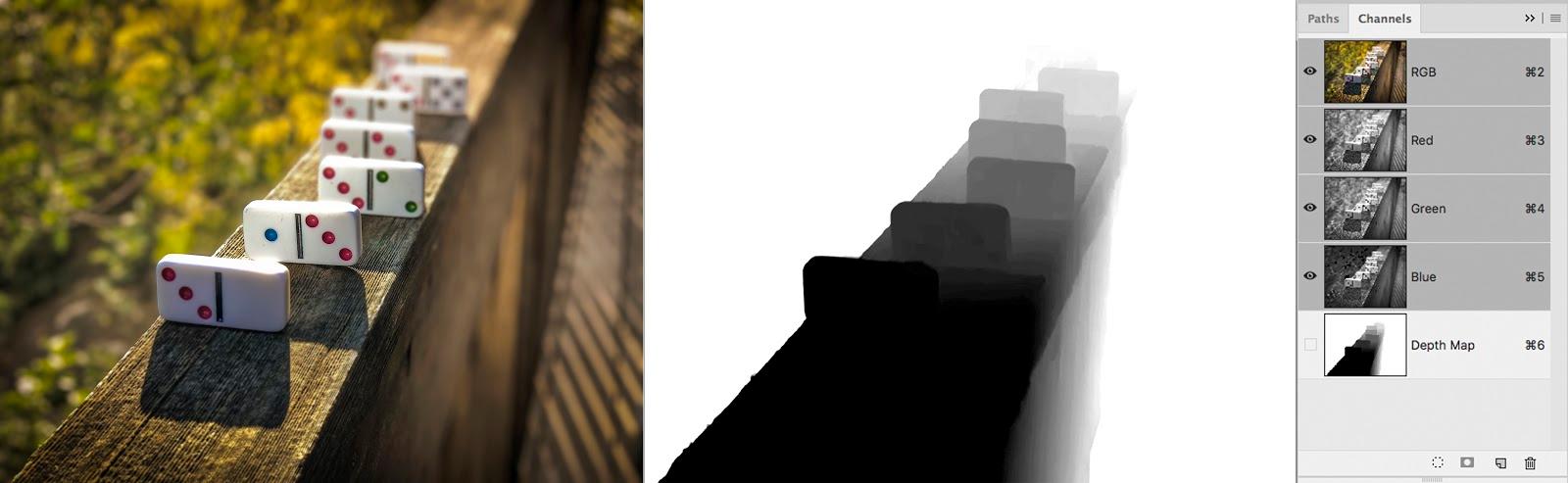

Alpha channel depth maps

And finally, depth information can be represented by an alpha channel which translates relative distance into light and dark tones. This method is used by both Apple and Android phones, and is likely to be more dominant than the methods outlined above. There are two reasons for this. The first is that these can be created almost instantly with ordinary camera phones. The second is that there are a variety of use cases as shown below.

Here’s an example of an alpha channel used as a depth map. Darker items are closer than lighter ones. You can also see the channels panel in Photoshop.This technique has been in use for a while in several applications. New depth-aware phones can create these automatically. Photo courtesy of Colleen Wheeler/www.Deke.com

- Selective depth of field - Phones that offer “portrait mode” are making use of the depth map to create a narrow depth of field synthetically. The depth map can be used to add blur to parts of the image that are farthest from the face, or darken the background, producing more dramatic portraits.

- Integration with Augmented Reality - AR typically uses geometrical awareness to place objects in a scene. To do this, the AR device needs to be able to define dimensional relationships in a scene and have an object fit naturally in that scene. Depth maps allow the AR device to understand the subject of the photo as a three dimensional object and more easily place it in a believable fashion.

- Other AI functionality - There are all kinds of tricks that can be done when depth information is included in an image. Backgrounds can be removed, synthetic lighting can be applied as well as other transformation of proportions. Put more simply, adding depth information to images will open up an entire new world of imaging possibilities that are hard to even imagine at the moment.

How are depth maps captured?

There are several ways to capture depth maps, but one is becoming common on smartphones. Smartphone cameras can rapidly refocus and capture multiple images in a fraction of a second. These images can be processed to find areas that are in focus. Because the camera knows where it is focused as it shoots, a “map” can be made of the relative distance of elements in the photo.

RGB-D

All of the above methods for describing depth fit into the generic category of Red, Green, Blue, Depth (RGB-D). This designation is a catch-all term for digital images that have an integrated depth component, although it usually implies the use of a depth map. It was first popularized in describing the way Microsoft Kinect “sees” depth information.

There are a number of other ways to represent depth digitally. Point clouds are sets of three dimensional coordinates that can be used to construct Virtual Reality spaces.

In the next post, we will look at cloud connectivity and an emerging component in visual imagery.